In our previous blog post, Docker setup and installation on Ubuntu, we installed Docker. Before diving into hands-on work with Docker, it's time to understand its main components and terminology.

The following are the major components of Docker:

- Docker-Machine

- Docker Engine

- Dockerfile

- Docker Images

- Docker Containers

Docker-Machine – This is also referred to as the Docker host. If you are working on a Linux machine, your Linux system itself is the Docker-machine / Docker host. If you are working with Boot2Docker on Windows, the Linux VM running inside Oracle VirtualBox is the docker-machine. With Boot2Docker on Windows, you can have multiple docker-machines and can create a new one with the following command:

```shell
docker-machine create --driver virtualbox <suitable machine name>
```

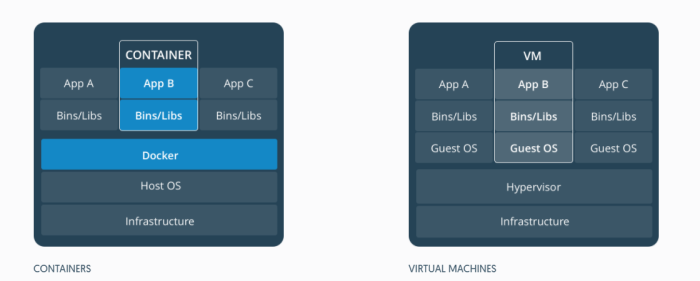

Docker Engine – Docker Engine is the core of Docker. It is the main software package that carries out all docker commands and lets users create images and containers: it runs as a background daemon (dockerd), and the docker command-line client sends every command to it. It is a lightweight, powerful, open source containerization technology combined with the capabilities to build and ship your applications.
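To see the client/daemon split in practice, the short script below asks the Engine for its version. It is a minimal sketch that assumes a Bash shell and only queries the Engine when `docker info` can reach the daemon; the variable name `ENGINE_VERSION` is just for illustration.

```shell
#!/usr/bin/env bash
# Sketch: the docker CLI is a client that forwards each command to the
# Docker Engine daemon; "docker version" reports both sides.
set -eu
ENGINE_VERSION=""

if docker info >/dev/null 2>&1; then
  # Prints a "Client" section and a "Server" section (the Engine).
  docker version
  # Ask only for the Engine (server) version.
  ENGINE_VERSION=$(docker version --format '{{.Server.Version}}')
  echo "Engine version: $ENGINE_VERSION"
else
  echo "Docker Engine is not reachable (is the daemon running?)" >&2
fi
```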

Dockerfile – A Dockerfile is a plain text file with no extension. It contains a series of instructions that are executed to create a Docker image. Instructions are Docker-specific commands which, when executed in sequence, generate a Docker image. If you have a Dockerfile, you can easily build an image from it with a custom name. Each instruction that changes the image's filesystem (such as RUN) is stored as a new layer. A sample Dockerfile that installs Python looks like this:

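```dockerfile
FROM ubuntu:14.04

# Install Python 2 and its tooling, then delete the apt package lists to keep the layer small
RUN apt-get update && \
    apt-get install -y python python-dev python-pip python-virtualenv && \
    rm -rf /var/lib/apt/lists/*

# Default working directory for later instructions and for containers
WORKDIR /data

# Start a bash shell when a container runs without an explicit command
CMD ["bash"]
```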
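To turn the sample into an image, save it as a file named `Dockerfile` in its own directory (the build context) and run `docker build` with a tag of your choice. The sketch below does both; the directory name `python-sandbox` and the tag `python-sandbox:1.0` are hypothetical examples, and the build step runs only when a Docker daemon is reachable.

```shell
#!/usr/bin/env bash
# Sketch: save the sample Dockerfile in its own build context and build it.
# "python-sandbox" (directory) and "python-sandbox:1.0" (tag) are hypothetical names.
set -eu

mkdir -p python-sandbox
# A Dockerfile is plain text with no extension; a quoted heredoc writes it verbatim.
cat > python-sandbox/Dockerfile <<'EOF'
FROM ubuntu:14.04
RUN apt-get update && \
    apt-get install -y python python-dev python-pip python-virtualenv && \
    rm -rf /var/lib/apt/lists/*
WORKDIR /data
CMD ["bash"]
EOF

# Build and tag the image only when the Docker daemon can be reached.
if docker info >/dev/null 2>&1; then
  docker build -t python-sandbox:1.0 python-sandbox
fi
```

The same build from inside the directory is simply `docker build -t python-sandbox:1.0 .`, where `.` is the build context.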

Docker images – A Docker image is a blueprint of what you need in a container. A Docker image is built up from a series of layers, each representing an instruction in the image's Dockerfile. All of the image's layers are read-only; when a container is started from the image, Docker adds a thin writable layer on top of them. To understand the concept at a high level, you can think of an image as a class and a container as an object of that class. An object is a runtime entity, just like a container, and just as a class can have many objects, an image can have multiple containers created from it.
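You can look at these layers yourself. Assuming an image tagged `python-sandbox:1.0` (a hypothetical name) was built from the sample Dockerfile, `docker history` lists one entry per build step, newest first, and `docker image inspect` can count the read-only filesystem layers. Metadata-only instructions such as `CMD` appear in the history with a size of 0B.

```shell
#!/usr/bin/env bash
# Sketch: inspect the layers of an image.
# Assumes python-sandbox:1.0 (hypothetical tag) was built from the sample Dockerfile.
set -eu
IMAGE="python-sandbox:1.0"
LAYER_COUNT=0

if docker image inspect "$IMAGE" >/dev/null 2>&1; then
  # One row per build step (including the base image's), with the size it added.
  docker history "$IMAGE"
  # Number of read-only filesystem layers that make up the image.
  LAYER_COUNT=$(docker image inspect --format '{{len .RootFS.Layers}}' "$IMAGE")
  echo "filesystem layers: $LAYER_COUNT"
fi
```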

Docker container – A Docker container is a lightweight execution environment to build and ship your application. It is simply your Docker image in action: a running instance of that image.
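As a quick check, the sketch below starts a throwaway container from the same hypothetical `python-sandbox:1.0` image and runs a single command in it. A command given after the image name overrides the Dockerfile's `CMD ["bash"]`; running `docker run -it --rm python-sandbox:1.0` with no command would instead drop you into that bash shell.

```shell
#!/usr/bin/env bash
# Sketch: run one command inside a short-lived container.
# Assumes python-sandbox:1.0 (hypothetical tag) was built from the sample Dockerfile.
set -eu
IMAGE="python-sandbox:1.0"
PY_VERSION=""

if docker image inspect "$IMAGE" >/dev/null 2>&1; then
  # The command after the image name replaces CMD ["bash"] for this container.
  # --rm deletes the container as soon as the command exits.
  # Python 2 prints its version on stderr, hence 2>&1.
  PY_VERSION=$(docker run --rm "$IMAGE" python --version 2>&1)
  echo "$PY_VERSION"
fi
```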

Let's understand the difference with an analogy. Think of your Dockerfile as a source file, Abc.java. When you build (compile) Abc.java, you get Abc.class, which corresponds to the Docker image. At run time you can create multiple objects of class Abc, and these objects correspond to Docker containers. So in the end, the runtime entity you actually work with is the container.
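The class/object analogy can be seen directly by creating two containers from one image. In this sketch, the image tag `python-sandbox:1.0` and the container names `abc-obj-1` / `abc-obj-2` are hypothetical. Only the first container writes a file, and `docker diff` shows that the change lives in that container's own writable layer while the shared image stays untouched.

```shell
#!/usr/bin/env bash
# Sketch: one image ("class"), two containers ("objects") with separate state.
# Assumes python-sandbox:1.0 was built from the sample Dockerfile; the tag and
# the container names abc-obj-1 / abc-obj-2 are hypothetical.
set -eu
IMAGE="python-sandbox:1.0"
DIFF1=""
DIFF2=""

if docker image inspect "$IMAGE" >/dev/null 2>&1; then
  # Container 1 writes a file into /data (the WORKDIR); container 2 changes nothing.
  docker run --name abc-obj-1 "$IMAGE" touch /data/state.txt
  docker run --name abc-obj-2 "$IMAGE" true

  # "docker diff" lists what each container changed in its own writable layer
  # (A = added, C = changed, D = deleted), relative to the shared image.
  DIFF1=$(docker diff abc-obj-1)
  DIFF2=$(docker diff abc-obj-2)
  echo "abc-obj-1:"; echo "$DIFF1"
  echo "abc-obj-2:"; echo "$DIFF2"

  # Remove both stopped containers; the image itself is not affected.
  docker rm abc-obj-1 abc-obj-2
fi
```

If containers with these names already exist from an earlier run, `docker run --name` will refuse to reuse the names; remove the old containers with `docker rm` first.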

In the next blog post, we will learn how to run your local source code inside a container.

For more details, hands-on practice, and personal or corporate training, please reach out to gauravtiwari91@yahoo.com.